Enhancing Google's Core Functionality Over Emphasizing Artificial Intelligence

Quick Links

- Google Goes All In on Generative AI

- Google Is Still Seeing What Sticks

- Consumers Need a Focus on Data Security More Than Gimmicks

Key Takeaways

Justin Duino / How-To Geek

- Google introduced new AI features but focused more on hype than substance and practicality.

- Gemini is Google’s new generative model taking over as the default assistant on Android phones.

- While some features like Magic Editor and Call Notes seem gimmicky, data security remains a valid concern.

Google unveiled the latest iterations of its burgeoning AI ecosystem at the Made by Google event held in Mountain View on Tuesday, a collection of generative features that somehow managed to be simultaneously too much and not nearly enough.

Over the course of the hour-and-forty-minute live-streamed event, a bevy of Google executives took the stage to sing the praises of its Gemini large language model and show off its performance in a series of live demonstrations, most of which went off without a hitch. The company pushed its AI achievements first and foremost, with the Pixel 9, Pixel Buds Pro 2, and Pixel Watch 3 only making their debuts in the second half of the event. This marks a stark reversal from just a couple of years ago when, if AI was ever mentioned, it was only in passing during Google’s traditionally software-focused press conferences and live events.

“It’s a complete end-to-end experience that only Google can deliver,” Rick Osterloh, Senior Vice President, Platforms & Devices at Google, told the assembled crowd. “For years, we’ve been pursuing our vision of a mobile AI assistant that you can work with as you work with a real-life personal assistant, but we’ve been limited by the bounds of what existing technologies could do.”

In order to exceed those limits, “we’ve completely rebuilt the entire system experience around our Gemini models,” he continued. “The new Gemini system can go beyond understanding your words to understanding your intent and communicate more naturally.”

Google Goes All In on Generative AI

Google is racing against industry rivals like Apple, OpenAI, Microsoft, Amazon, and Anthropic to maintain its AI leadership. It has integrated Gemini’s generative functionality into its Workspace app suite, allowing the machine learning system to leverage user data (specifically from Google Docs, Slides, Gmail, Drive, and Sheets) for personalized responses. Users can generate text and images for Google Docs, create presentation slides using natural language, or produce professional-looking reports based on Sheets spreadsheets.

But this is just the beginning of Google’s AI ambitions. At Tuesday’s event, the company announced Gemini as the replacement for Google Assistant, making it the default assistant on Android phones. This move aligns with Apple’s decision to enhance Siri with OpenAI’s ChatGPT. Gemini offers a more conversational and intelligent assistant capable of being interrupted without losing focus. The newly introduced Gemini Live feature enables real-time interactions with the AI, positioning Google to compete with OpenAI’s Advanced Voice Mode. Gemini will also eventually interpret phone screen content using an upcoming overlay feature.

Google Is Still Seeing What Sticks

I wish that were the extent of what Google has been working on. But as has been the case since ChatGPT made its debut some 18 months ago, tech companies just won’t stop trying to shoehorn AI into any and every product they make, regardless of whether it actually adds value to the user experience.

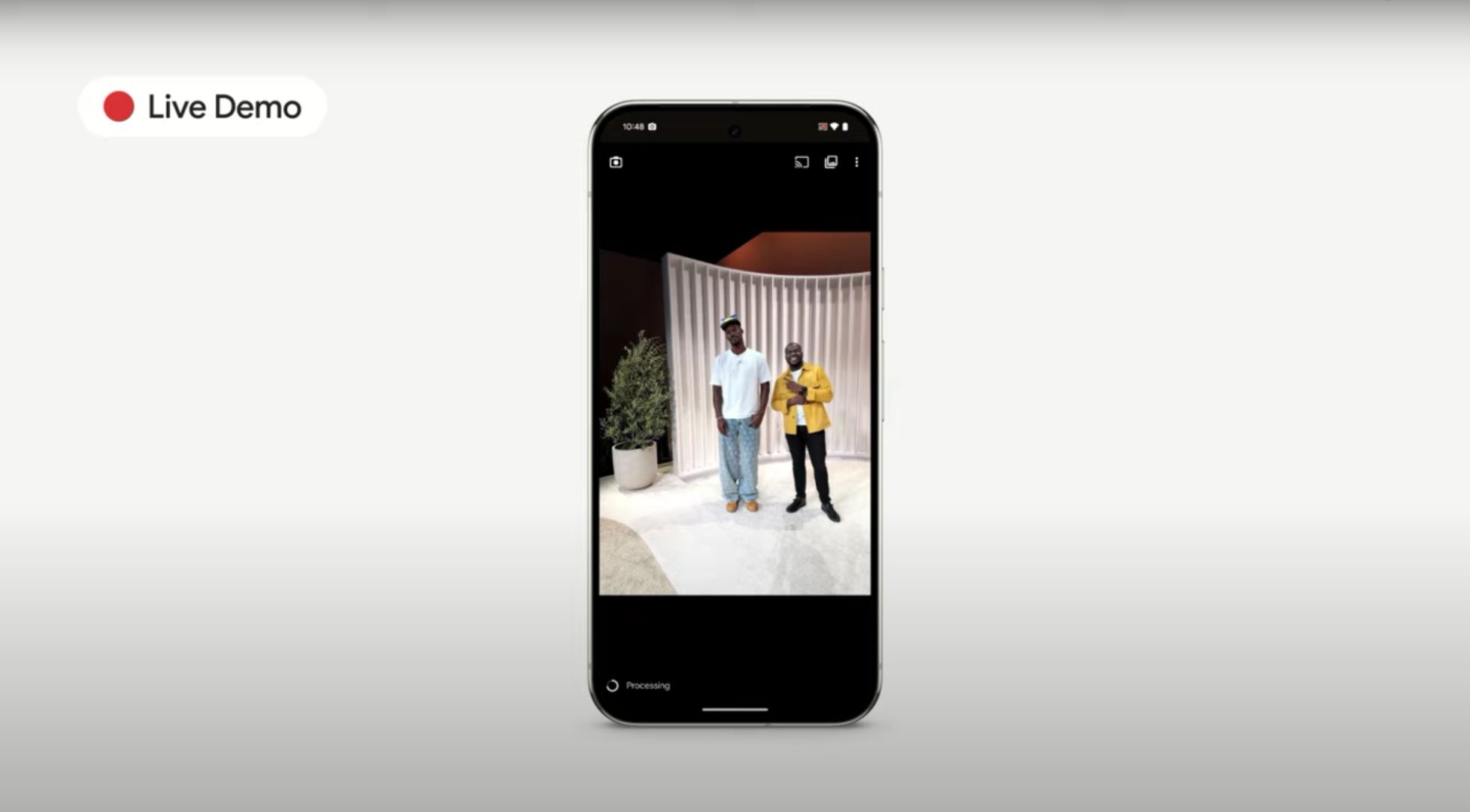

Take the new Add Me feature, for example. It allows you to merge two photographs (one of the larger group, the other of the lone photographer) into a single image. “One of the biggest problems with group photos is how to include the entire group at times,” Pixel Product Manager Shenaz Zack said onstage. “You end up taking a photo that you also want to be in. The new Add Me fixes that so you can be included in your family vacation photo.”

You could also just ask a passerby to snap a quick photo of you, as people have been doing since the advent of cameras, or use one of those new-fangled “tripods” that holds your camera at the appropriate height and orientation and can remotely click the shutter while you stand with the rest of your group. Given how resource-intensive running even basic AI queries is compared to conventional searches, I don’t understand how Google can justify developing a feature like this. What problem does it solve, exactly? Interacting with other people in public? Carrying a selfie stick? It’s an interesting feature idea, to be sure, and a good showcase of AI’s image manipulation powers, but I find it difficult to believe that “getting everybody into the group shot” is a pressing, real-world issue among many of Google’s users.

Magic Editor is another camera-based AI-enhanced Android feature that feels increasingly gimmicky. In Tuesday’s example, Kenny Sulaimon, Google’s Pixel Camera Product Manager, walked the Made by Google audience through the feature’s latest round of updates. These updates will allow you, as a Pixel 9 owner, to reframe photos, have the AI suggest optimal crops, and expand backgrounds using generative AI. It also allows you to “reimagine” specific areas and aspects of a photo, such as changing a field of grass to a field of wildflowers or adding additional elements to the picture’s background.

AI is continually touted as a revolutionary technology that will help us cure intractable diseases, discover novel drug and protein structures, and solve the deepest mysteries of the universe. But the best Google seems to be able to do is give the public an easier way to add AI-generated hot air balloons to images like they’re 21st century clip art. What a letdown.

You can see the good intentions behind both the new Pixel Screenshots and Call Notes features. The former uses AI to process screenshots to help you easily find them later using text prompts, and the latter provides you with an AI-generated summary of a phone call’s most pertinent topics and information. However, the potential privacy implications of their use give me pause.

Screenshots bears more than a passing resemblance to the defunct Microsoft Recall feature that was recently shut down amid an outcry from users over personal data privacy. Unlike Recall, though, it only ingests images taken directly by the user and can be manually toggled. Call Notes users will have to give Google real-time access to their phone calls in order for the feature to work. This opens up yet another source of your personal data that can (and likely will at some point) be leaked online. It’s not like the AI industry has a particularly stellar track record for maintaining data security.

Consumers Need a Focus on Data Security More Than Gimmicks

You’d think the industry would, given how much of your data it needs in order to operate at the levels it’s been hyped to. From the end-user features themselves to the large language models that they’re built atop, today’s AI systems demand massive amounts of data, often scraped from the public internet without authorization, in order to perform their inference operations with a sufficiently high degree of accuracy. But the more data that hyperscalers like Google, Microsoft, or AWS collect, the more likely it is that the data will be accessed by unauthorized users, whether they’re Google employees and contractors, third-party hackers, or law enforcement personnel.

While I do think that Google is generally on the right track with its AI development, I can’t help but notice the scattershot nature of the features shown off on Tuesday and how each one needs access to a different bit of my personal data. I’m a fan of integrating Gemini with Workspace, but it feels like Google is still throwing ideas at a wall and seeing what gimmick sticks best instead of purposefully building AI features that will create real change in people’s lives. Perhaps Google’s upcoming Project Astra, which will allow an AI to use your phone’s camera to observe, analyze, and understand the world around it, will prove to be the killer app that the AI industry needs to keep its bubble from bursting.

- Title: Enhancing Google's Core Functionality Over Emphasizing Artificial Intelligence

- Author: Frank

- Created at : 2025-02-15 18:35:59

- Updated at : 2025-02-19 18:19:48

- Link: https://some-techniques.techidaily.com/enhancing-googles-core-functionality-over-emphasizing-artificial-intelligence/

- License: This work is licensed under CC BY-NC-SA 4.0.