Demystifying AI Imagery: Understanding That Not All Visuals Come From AI Technology

Quick Links

- AI Media Is So Real, We Need Help Distinguishing It From Reality

- Meta’s Labeling Lumps AI-Generated and AI-Assisted Together

- Is There a Difference Between AI-Generated and AI-Assisted?

- Meta’s AI Labeling Is Not Foolproof

- New Standards for Photography Are on the Horizon

Meta recently rolled out a new “Made with AI” tag to help users distinguish between real and AI-generated content. The problem is that the tag appears indiscriminately on images created entirely with AI and those merely edited with AI. This has sparked a debate about how much AI is enough to taint an image and introduced even more confusion about what is real and what is not.

AI Media Is So Real, We Need Help Distinguishing It From Reality

Do you remember that photo of the Pope in the stylish puffer jacket? At first glance, it seemed perfectly normal, and we all thought we had just been sleeping on the Pope’s swag. However, it was later revealed that the photo was AI-generated.

Going over it a second time with a fine-toothed comb, you can tell that the image is AI-generated, but that’s exactly the problem: no one browses the web with that much scrutiny.

With the proliferation of AI image-generation services, there are thousands, if not millions, of AI-generated images circulating the web. Facebook is already swamped with tons of AI-generated images, and other social media platforms are not far behind.

Most people will miss the signs that these images are AI-generated and take them as an accurate representation of reality. With election season coming up and misinformation so widespread, being able to tell fabrication apart from reality has never mattered more.

With this in mind, Meta has started labeling AI-generated images uploaded to Facebook, Instagram, and Threads to help people tell them apart with ease. However, not everyone is happy about the way the company has executed this plan.

Meta’s Labeling Lumps AI-Generated and AI-Assisted Together

The uproar around Meta’s AI labeling stems from the fact that the company does not distinguish between using AI to generate photo-realistic images and using an AI process to edit a photo. As far as Meta is concerned, both fall into the same category.

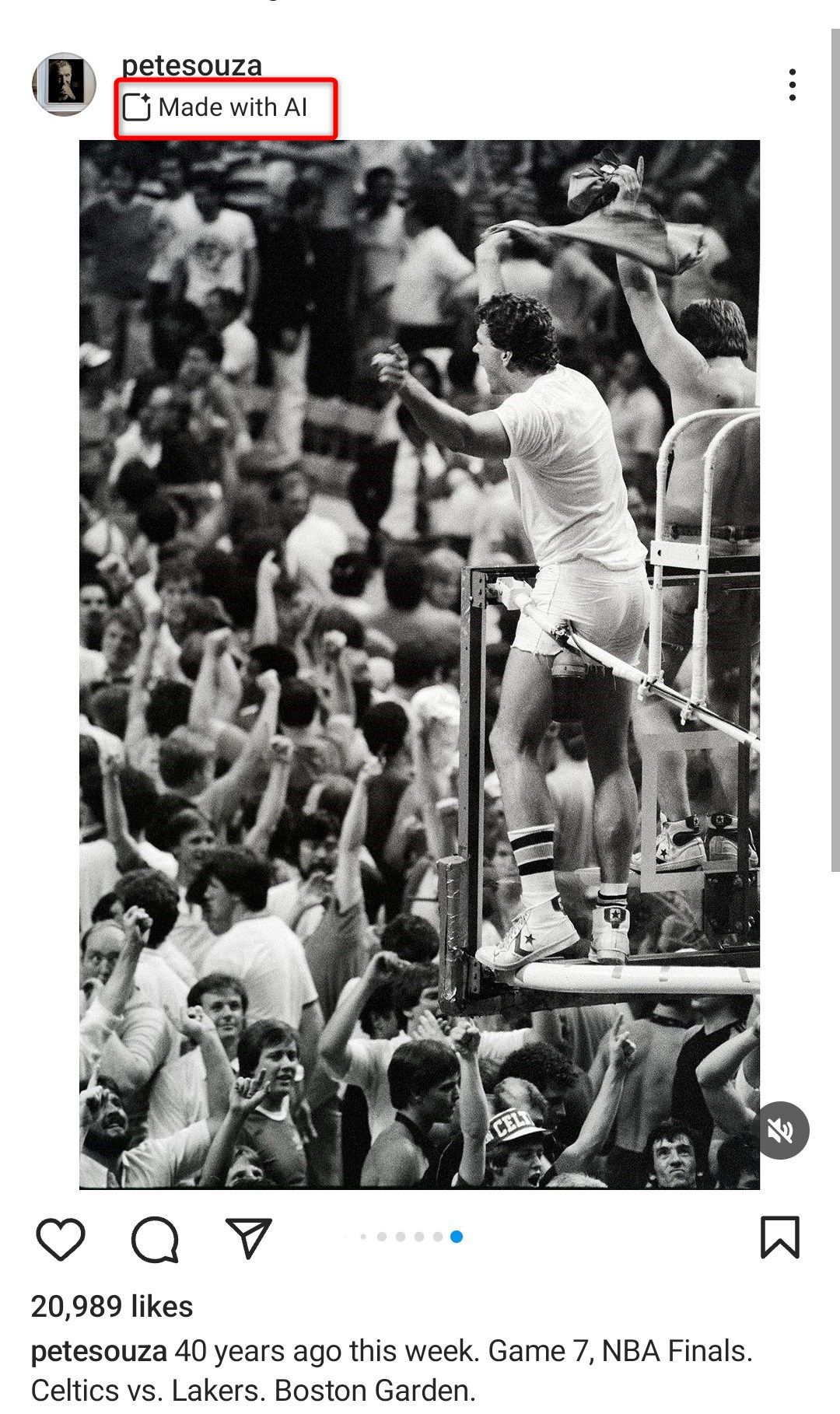

Many aggrieved photographers have taken to social media to share examples of this happening to them. Former White House photographer Pete Souza complained that he was unable to uncheck the “Made with AI” box when posting a photo he took of a basketball game.

Another photographer, Peter Yan, had his image of Mount Fuji tagged “Made With AI” because he used a generative AI tool to remove a trash bin in the photo.

Is There a Difference Between AI-Generated and AI-Assisted?

The crux of the debate around Meta’s AI tag is whether it is fair to label images as “Made With AI” even when they have only been minimally edited with generative AI tools.

Understandably, photographers who use generative AI tools don’t want the label “Made With AI” anywhere near their work. The term is loaded, suggesting the scene is fabricated and implying they are profiting off the work of others, given that many AI programs are trained on copyrighted works without consent. Moreover, having their work labeled as “Made With AI” undermines the effort and skill involved in creating the final photo.

On the other side of the fence, those in favor of Meta’s labeling argue that any use of a generative AI tool, no matter how minor, introduces AI into the mix. Therefore, the photo is no longer an accurate representation of the scene it captured and should technically fall into the category of “Made With AI.”

The anti-labelers counter that people have been editing photos forever. Is there really a difference between removing the background of a photo with AI and doing it with the magic wand tool? If Meta cares so much about portraying reality exactly as it is, then shouldn’t there be a tag identifying when a photo has been altered in any way?

They go on to point out that AI tools are now baked into everything from the “Space Zoom” on the Galaxy S20 Ultra to features like generative fill in industry-standard photo editing apps such as Adobe Photoshop. By labeling images with minor AI adjustments as “Made With AI,” Meta creates a “boy who cried wolf” scenario: innocent images get the label, people lose trust in it, and they become more susceptible to AI content that is actually misleading.

Other people try to find a middle ground, suggesting a separate “AI-assisted” tag for images that have only been minimally altered with AI. However, that idea immediately runs into difficulties. How much AI modification should be allowed before a photo is considered “Made with AI”? And would such a system even serve any purpose? Couldn’t a malicious actor just alter a small part of an image with AI to create a misleading scene?

Meta’s AI Labeling Is Not Foolproof

The entire “Made With AI” debate may be a moot point anyway, since anyone who wants to can avoid the tag entirely. Meta relies on information in the photo metadata to identify AI processes, and that metadata is trivially easy to remove. PetaPixel found in its testing that simply copy-pasting the image into a blank document was enough to get around the tag, and posting a screenshot of the image also avoids it.
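To see why a metadata-based label is so easy to dodge, here is a minimal Python sketch. It assumes the labeler simply looks for the IPTC “digital source type” terms that editing tools can write into a file’s XMP metadata. Meta’s actual detection rules are not public, so the function names and the fake file below are purely illustrative.

```python
import re

# IPTC "digital source type" terms that signal generative AI involvement.
# Assumption: a labeler searches for these; Meta's real rules are not public.
AI_SOURCE_TYPES = (
    "trainedAlgorithmicMedia",               # fully AI-generated
    "compositeWithTrainedAlgorithmicMedia",  # real photo edited with a generative tool
)

# Matches an embedded XMP packet from its opening to its closing marker.
XMP_PACKET = re.compile(rb"<\?xpacket begin=.*?<\?xpacket end=.*?\?>", re.DOTALL)


def has_ai_marker(file_bytes: bytes) -> bool:
    """Return True if any AI source-type term appears in the raw bytes."""
    return any(term.encode("ascii") in file_bytes for term in AI_SOURCE_TYPES)


def strip_xmp(file_bytes: bytes) -> bytes:
    """Drop embedded XMP packets, roughly what a screenshot or re-save does."""
    return XMP_PACKET.sub(b"", file_bytes)


# Stand-in for an image file: fake pixel data followed by an XMP packet
# recording that a generative tool touched the image.
pixels = b"\xff\xd8...pixel data...\xff\xd9"
xmp = (
    b'<?xpacket begin="" id="W5M0MpCehiHzreSzNTczkc9d"?>'
    b"<Iptc4xmpExt:DigitalSourceType>"
    b"http://cv.iptc.org/newscodes/digitalsourcetype/"
    b"compositeWithTrainedAlgorithmicMedia"
    b"</Iptc4xmpExt:DigitalSourceType>"
    b'<?xpacket end="w"?>'
)
edited_photo = pixels + xmp

labeled_before = has_ai_marker(edited_photo)            # marker is present
labeled_after = has_ai_marker(strip_xmp(edited_photo))  # marker is gone
```

Notice that a check like this treats a fully generated image and a lightly retouched photo the same way, and that the pixels themselves never change when the marker disappears.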

The reliability of the labeling is also suspect: several obviously AI-generated images on Meta’s platforms carry no tag, while other images made without any AI are slapped with it.

New Standards for Photography Are on the Horizon

Despite the current messy state of things, there is hope on the horizon. Many of the big digital media players are rethinking photos and videos with a greater focus on their provenance and authentication. I suspect that the field will see a massive shake-up in the coming years. Already, there are new initiatives for authenticating digital media, the biggest of which is the Coalition for Content Provenance and Authenticity, or C2PA for short.

The C2PA is a joint project between big tech companies like Adobe, Arm, Intel, Microsoft, and Google that aims to provide context and history for all digital media. The idea is that from the moment of capture, a digital record is created and bound to the media file, detailing where it was taken, on what camera, and by whom. Every subsequent edit made to the file is also added to this record, and all this information is then provided to the end viewer, ensuring they are always aware of the photo’s provenance.
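The idea of a record that starts at capture and grows with each edit can be sketched as a simple hash chain in Python. This is a toy model and not the C2PA format: real C2PA manifests are cryptographically signed, whereas this sketch only links unsigned hashes, and every name and value in it (the camera, the author, the helper functions) is hypothetical.

```python
import hashlib
import json


def _digest(body: dict) -> str:
    """Stable SHA-256 hex digest of a JSON-serializable dict."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def _seal(body: dict) -> dict:
    """Return a copy of the entry with its own hash attached."""
    return {**body, "hash": _digest(body)}


def start_record(camera: str, author: str, location: str) -> list:
    """Create the capture entry, the first link in the chain."""
    body = {"action": "captured", "camera": camera, "author": author,
            "location": location, "prev": None}
    return [_seal(body)]


def add_edit(record: list, tool: str, description: str) -> list:
    """Append an edit entry that points at the hash of the previous entry."""
    body = {"action": "edited", "tool": tool, "description": description,
            "prev": record[-1]["hash"]}
    return record + [_seal(body)]


def is_intact(record: list) -> bool:
    """Check that every entry matches its hash and links to its predecessor."""
    prev = None
    for entry in record:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body.get("prev") != prev or _digest(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True


# Hypothetical history: a photo is captured, then one object is removed.
record = start_record("Hypothetical Cam X1", "A. Photographer", "Mount Fuji")
record = add_edit(record, "generative fill", "removed a trash bin")

# Rewriting an entry after the fact breaks the chain.
tampered = [dict(entry) for entry in record]
tampered[1]["description"] = "no edits"

original_ok = is_intact(record)
tampered_ok = is_intact(tampered)
```

A viewer given a record like this could see exactly which tool touched the photo and what it did, instead of a single catch-all label. Without signatures, though, nothing stops someone from rebuilding the whole chain, which is the gap the real standard’s signing is meant to close.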

The C2PA is still in its early stages, but new cameras supporting the standard are already being produced, and I suspect it won’t be long before it becomes the norm.

It’s getting harder than ever to tell what’s real from what’s AI-generated, and Meta’s new label certainly isn’t helping matters. Initiatives like the C2PA are a light at the end of a dark tunnel, but they are still a while away from general adoption.

It falls to you to evaluate the media you consume with a critical eye to make sure that what you’re seeing is authentic. Question the source, seek additional information, and remember that not all “Made With AI” images are made by AI.

- Author: Frank

- Created at : 2025-02-14 19:50:24

- Updated at : 2025-02-19 16:42:12

- Link: https://some-techniques.techidaily.com/demystifying-ai-imagery-understanding-that-not-all-visuals-come-from-ai-technology/

- License: This work is licensed under CC BY-NC-SA 4.0.